YouTube announced today (14) new restrictions on publishing videos with content generated by artificial intelligence. From now on, creators will be required to disclose when their videos contain this type of content, so that viewers are not deceived.

The new requirements have already been integrated into YouTube’s Creator Studio, which was recently launched in Brazil. During the publishing steps, the creator is asked whether the video contains content altered or generated with artificial intelligence that could be mistaken for a real person, place or event, such as deepfakes.

For context, a deepfake is a video in which a person's face is replaced or altered so that they look like someone else or appear to say something they never actually said.

Although this technology may seem fun for some purposes, it could pose a serious danger during the US presidential elections, since people can fake videos of candidates.

If a video has any realistic elements generated with artificial intelligence, YouTube will add a label to let users know that the content is not real. The labels will initially appear in the Android and iOS apps, and will soon be added to YouTube for desktop and TV as well.

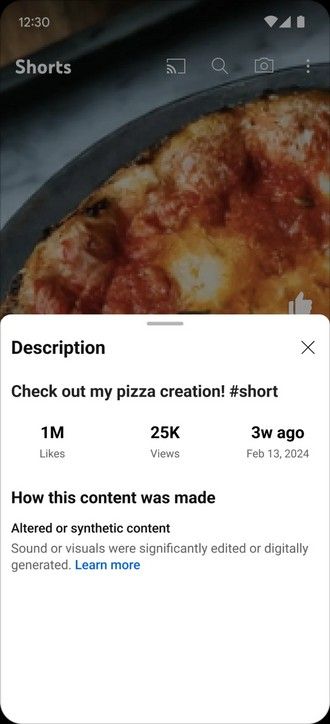

Labels for AI-generated content will be shown in the player and in the video description, but videos that cover sensitive topics such as news, health, and politics will carry more prominent labels so that viewers aren’t fooled into thinking the scenes or events are real.

Elsewhere in the industry, OpenAI, the maker of ChatGPT, is also taking measures to prevent the tool from being used to create fake news during the US elections.

